Threads's "Federation"

It's the holidays, and you know what that means. … I've had enough time off from programming professionally that now I want to do some of it for fun!

I previously wrote a tool that syncs statuses from Mastodon to my FeoBlog instance. I was thinking about making some updates to that, and then I remembered that I'd read that Threads got around to testing their federation support.

Maybe I can update my tool to also sync posts from Threads, then? Let's test that out and see how their interoperability is coming along. (Spoiler: So far, not great.)

Nushell's built-in support for HTTP requests, JSON, and structured data makes it pretty nice for doing this kind of experimentation, so that's what I'm using here. Let's start by fetching a "status" with the Mastodon REST API:

def getStatus [id: int, --server = "mastodon.social"] {
    http get $"https://($server)/api/v1/statuses/($id)"
}

let status = getStatus 111656384454261788
$status | select uri created_at in_reply_to_id

This works, and gives us back (among other data):

╭────────────────┬──────────────────────────────────────────────────────────────────────╮
│ uri │ https://mastodon.social/users/Iamgroot11/statuses/111656384454261788 │
│ created_at │ 2023-12-28T05:26:57.877Z │
│ in_reply_to_id │ 111656368951064667 │
╰────────────────┴──────────────────────────────────────────────────────────────────────╯

So does this, even though that status is coming from a different server. (Yay, federation!)

let status2 = getStatus $status.in_reply_to_id
$status2 | select uri created_at in_reply_to_id

╭────────────────┬───────────────────────────────────────────────────────────────╮
│ uri │ https://spacey.space/users/kmccoy/statuses/111656368910605303 │
│ created_at │ 2023-12-28T05:23:00.000Z │
│ in_reply_to_id │ 111656361314256557 │
╰────────────────┴───────────────────────────────────────────────────────────────╯

We can use the ActivityPub API for retrieving objects from remote servers to confirm that the version we got from our server matches the one published by this user:

def getActivityStream [uri] {(
    http get $uri
        --headers [
            accept
            'application/ld+json; profile="https://www.w3.org/ns/activitystreams"'
        ]
    | from json
)}

let remote_status2 = getActivityStream $status2.uri
$remote_status2 | select url published inReplyTo

╭───────────┬──────────────────────────────────────────────────────────────────╮
│ url │ https://spacey.space/@kmccoy/111656368910605303 │
│ published │ 2023-12-28T05:23:00Z │
│ inReplyTo │ https://www.threads.net/ap/users/mosseri/post/17928407810714224/ │
╰───────────┴──────────────────────────────────────────────────────────────────╯
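The two copies agree, but note that the timestamps differ in formatting (2023-12-28T05:23:00.000Z from Mastodon's REST API vs. 2023-12-28T05:23:00Z from the origin), so a programmatic comparison should parse them rather than compare strings. Here's a minimal TypeScript sketch of that check. It assumes the origin's object carries an id equal to Mastodon's uri field (Mastodon documents uri as the URI used for federation); the interface and function names are hypothetical:

```typescript
// The subset of a Mastodon REST API status used here.
interface MastodonStatus {
  uri: string; // the status's ActivityPub id, as used for federation
  created_at: string; // e.g. "2023-12-28T05:23:00.000Z"
}

// The subset of the ActivityStreams object served by the origin server.
interface ActivityObject {
  id: string;
  published: string; // e.g. "2023-12-28T05:23:00Z"
}

// Compare two ISO 8601 timestamps by the instant they denote, not by their text.
function sameInstant(a: string, b: string): boolean {
  const ta = Date.parse(a);
  const tb = Date.parse(b);
  return !Number.isNaN(ta) && ta === tb;
}

// Hypothetical check: does the federated copy agree with the origin's copy?
function statusMatchesOrigin(status: MastodonStatus, origin: ActivityObject): boolean {
  return status.uri === origin.id && sameInstant(status.created_at, origin.published);
}
```

A real check would compare more fields (content, inReplyTo, attribution); two are enough to show why normalization matters.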

But there's no such luck with Threads. We can fetch the status from mastodon.social:

let status3 = getStatus $status2.in_reply_to_id
$status3 | select uri created_at in_reply_to_id

╭────────────────┬──────────────────────────────────────────────────────────────────╮
│ uri │ https://www.threads.net/ap/users/mosseri/post/17928407810714224/ │
│ created_at │ 2023-12-28T05:15:53.000Z │
│ in_reply_to_id │ │
╰────────────────┴──────────────────────────────────────────────────────────────────╯

But threads.net doesn't seem to actually serve the ActivityPub (ActivityStreams) object it claims lives at that URL/URI:

getActivityStream $status3.uri

Error: nu::shell::network_failure

  × Network failure
   ╭─[entry #182:1:1]
 1 │ def getActivityStream [uri] {(
 2 │     http get $uri
   ·     ──┬─
   ·       ╰── Requested file not found (404): "https://www.threads.net/ap/users/mosseri/post/17928407810714224/"
 3 │         --headers [
   ╰────

I discovered that the URL (not URI) advertises that it is an "activity":

http get $status3.url | parse --regex '(<link .*?>)' | find -r activity | get capture0 | each { from xml }

╭──────┬──────────────────────────────────────────────────────────────┬────────────────╮
│ tag │ attributes │ content │
├──────┼──────────────────────────────────────────────────────────────┼────────────────┤
│ link │ ╭──────┬───────────────────────────────────────────────────╮ │ [list 0 items] │
│ │ │ href │ https://www.threads.net/@mosseri/post/C1YndCeuddr │ │ │
│ │ │ type │ application/activity+json │ │ │
│ │ ╰──────┴───────────────────────────────────────────────────╯ │ │
╰──────┴──────────────────────────────────────────────────────────────┴────────────────╯

… but that URL doesn't serve an Activity either. (It just ignores our Accept header and gives back a Content-Type: text/html; charset="utf-8".)
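Both observations can be checked mechanically. Below is a TypeScript sketch with hypothetical helper names: findActivityLinks pulls href values out of <link> tags typed as ActivityStreams, and isActivityStreamsContentType checks whether a response's Content-Type really is ActivityStreams (application/activity+json, or application/ld+json with the ActivityStreams profile, the two media types the ActivityPub spec uses). The regex-based tag matching is only good enough for poking at a single page; a real client should use an HTML parser.

```typescript
// True if a Content-Type header denotes an ActivityStreams document:
// application/activity+json, or application/ld+json with the AS2 profile.
function isActivityStreamsContentType(contentType: string | null): boolean {
  if (contentType === null) return false;
  const [mediaType, ...params] = contentType
    .split(";")
    .map((part) => part.trim().toLowerCase());
  if (mediaType === "application/activity+json") return true;
  return (
    mediaType === "application/ld+json" &&
    params.some(
      (p) => p.replace(/\s+/g, "") === 'profile="https://www.w3.org/ns/activitystreams"',
    )
  );
}

// Collect href values from <link> tags whose type is an ActivityStreams type.
function findActivityLinks(html: string): string[] {
  const hrefs: string[] = [];
  const linkTag = /<link\b[^>]*>/gi;
  let match: RegExpExecArray | null;
  while ((match = linkTag.exec(html)) !== null) {
    const tag = match[0];
    const type = /\stype\s*=\s*["']([^"']*)["']/i.exec(tag)?.[1];
    const href = /\shref\s*=\s*["']([^"']*)["']/i.exec(tag)?.[1];
    if (href !== undefined && type !== undefined && isActivityStreamsContentType(type)) {
      hrefs.push(href);
    }
  }
  return hrefs;
}
```

Against the behavior described above, findActivityLinks would find the advertised link, and isActivityStreamsContentType would reject the text/html; charset="utf-8" response that comes back from it.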

Summary

So what we seem to have here is Threads doing juuuust enough work to shove ActivityPub messages into Mastodon. But it's certainly not yet supporting enough of the ActivityStream/ActivityPub API to validate against spoofing attacks, as the W3C docs recommend:

Servers SHOULD validate the content they receive to avoid content spoofing attacks. (A server should do something at least as robust as checking that the object appears as received at its origin, but mechanisms such as checking signatures would be better if available). No particular mechanism for verification is authoritatively specified by this document, [...]
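As a rough illustration of the "checking that the object appears as received at its origin" approach from that quote, here's a TypeScript sketch. It assumes the caller has already requested the object's id with an ActivityStreams Accept header (as getActivityStream does above) and classified the response. OriginResult, the compared field list, and validateAgainstOrigin are all hypothetical; this is not a description of what Mastodon actually does.

```typescript
// Outcome of fetching the object's `id` from its origin server.
type OriginResult =
  | { kind: "found"; object: Record<string, unknown> }
  | { kind: "not_found" }
  | { kind: "not_activitystreams"; contentType: string | null };

type Verdict = { ok: true } | { ok: false; reason: string };

// Fields to compare. This list is an assumption for illustration.
const COMPARED_FIELDS: readonly string[] = [
  "id", "type", "attributedTo", "content", "published", "inReplyTo",
];

// Accept a pushed object only if the origin serves a matching copy.
function validateAgainstOrigin(received: Record<string, unknown>, origin: OriginResult): Verdict {
  if (origin.kind === "not_found") {
    return { ok: false, reason: "origin has no object at this id" };
  }
  if (origin.kind === "not_activitystreams") {
    return { ok: false, reason: `origin served ${origin.contentType ?? "no Content-Type"}, not ActivityStreams` };
  }
  for (const field of COMPARED_FIELDS) {
    // JSON.stringify is a cheap deep comparison; it is sensitive to key
    // order inside nested objects, which is acceptable for a sketch.
    if (JSON.stringify(received[field]) !== JSON.stringify(origin.object[field])) {
      return { ok: false, reason: `field "${field}" differs from the origin's copy` };
    }
  }
  return { ok: true };
}
```

Under a check like this, the Threads post above would be rejected at the first step, since its id returns a 404.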

Is Mastodon just accepting those objects from a peered server without any sort of validation that they match what that peer serves for that activity? That would allow Threads to inject ads into (or otherwise modify) statuses that it pushes into Mastodon.

Or maybe Threads is only responding to ActivityStream requests if they're coming from a peer server that has been explicitly granted access? That would let them "federate" with peers on their terms, while not letting us plebs peek into the walled garden of data.

I'll reservedly concede that this may just be the current unfinished state of Threads's support for ActivityPub/ActivityStreams. But let's wait and see how much they actually implement, and how interoperable it ends up being.

Replace Bash With Deno

I'm starting to get a reputation as a bit of a Deno fanatic lately. But (if you haven't seen the title of this blog post) it might surprise you why I'm such a fan.

If you visit deno.com, the official documentation will tell you things like:

- "Web-standard APIs"

- "Best in class HTTP server speeds"

- "Secure by default."

- "Hassle-free deployment" with Deno Deploy

While all of those are great features, in my opinion the most underrated feature of Deno is that it's a great replacement for Bash (and Python/Ruby!) for most of your CLI scripting/automation needs. Here's why:

Deno is not Bash

Bash is great for tossing a few CLI commands into a file and executing them, but the moment you reach for a variable or an if statement, you should probably switch to a more modern programming language.

Bash is old and has accumulated a lot of quirks that not all programmers will be familiar with. Rather than being removed, or at least flagged with warnings, they're kept to ensure backward compatibility. But that doesn't make for a great programming language.

For example, a developer might write code roughly like:

if [ $x == 42 ]; then
    echo "do something"
else
    echo "do something else"
fi

Can you spot the problems?

- If $x is unset or empty, the test expression will fail with an error.

- If $x is a string that includes spaces and/or a ] character, the test will likely return an error.

- Neither of the above errors stops the script from executing. Each is equivalent to the test expression returning "false". You're just going to have unexpected behavior in the rest of your script.

- Worse, some values of $x may silently return false positives for this match. (I leave crafting them as an exercise to the reader. Share your favorites!)
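For contrast, here's roughly the same check in TypeScript, the way you might write it in a Deno script. parseIntStrict and checkX are hypothetical helper names; the point is that bad input fails loudly instead of silently taking the else branch:

```typescript
// Parse a raw string (e.g. from an environment variable) into an integer,
// throwing instead of quietly evaluating to "false" like `[` does.
function parseIntStrict(raw: string | undefined): number {
  if (raw === undefined) {
    throw new Error("x is not set");
  }
  if (!/^-?\d+$/.test(raw)) {
    throw new Error(`x is not an integer: ${JSON.stringify(raw)}`);
  }
  return Number(raw);
}

// The branch from the Bash example. `x` is typed as a number, so `===`
// compares numbers: no word splitting, no special meaning for "]" or spaces.
function checkX(x: number): string {
  if (x === 42) {
    return "do something";
  } else {
    return "do something else";
  }
}
```

In a Deno script you might call checkX(parseIntStrict(Deno.env.get("X"))) (run with --allow-env). If X is missing or malformed, the uncaught exception stops the script with a non-zero exit code rather than letting it carry on.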

These gotchas are even more dangerous when you're writing a script to manage files. Several versions of rm now have built-in protections against accidentally running rm -rf / because it is such a common mistake you can make in Bash and other shells when your variable expansion goes awry.

Do you need an associative array? Even today, the default version of Bash on MacOS is 3.2, which is old enough to not support them (they arrived in Bash 4). If you write a Bash script that uses associative arrays, you'll get different (wrong) behavior on MacOS.

Seriously, stop writing things in Bash!

Single File Development

My theory is that Bash scripts are the default because people want to just write a self-contained file to get a thing done quickly.

Previously, Python was what I would reach for once a task became unwieldy in Bash. But, in Python you might need to include a requirements.txt to list any library dependencies you use. And if you depend on particular versions of libraries, you might need to set up a venv to make sure you don't conflict with the same libraries installed system-wide at different versions. Now your "single-file" script needs multiple files and install instructions.

But in Deno you can include versioned dependencies right in the file:

import { range } from "https://deno.land/x/better_iterators@v1.3.0/mod.ts"

for (const value of range({step: 3}).limit(10)) {
    console.log(value)
}

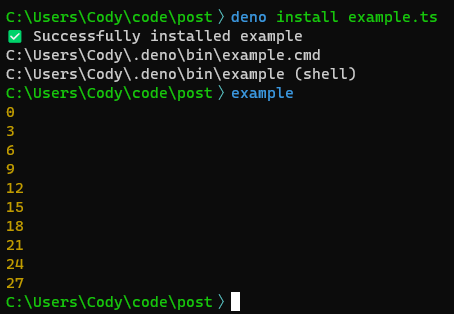

There is no install step for executing this script. (Assuming your system already has Deno.) The first time you deno run example.ts, Deno will automatically download and cache the remote dependencies.
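If you're curious what that loop computes without fetching the dependency, here's a dependency-free sketch of a lazy range() and limit() built on TypeScript generators. This is not better_iterators' implementation or API (its chained .limit(10) becomes a plain function call here), and starting at 0 by default is an assumption of this sketch:

```typescript
// Infinite arithmetic sequence: start, start + step, start + 2 * step, ...
function* range({ start = 0, step = 1 }: { start?: number; step?: number } = {}): Generator<number> {
  for (let value = start; ; value += step) {
    yield value;
  }
}

// Lazily take at most `count` items from any iterable.
function* limit<T>(items: Iterable<T>, count: number): Generator<T> {
  if (count <= 0) return;
  let taken = 0;
  for (const item of items) {
    yield item;
    taken += 1;
    if (taken >= count) return;
  }
}

// Prints 0, 3, 6, ..., 27, one per line.
for (const value of limit(range({ step: 3 }), 10)) {
  console.log(value);
}
```

Because both are generators, the infinite range() is never materialized; limit() stops pulling from it after ten values.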

You can even add a "shebang" to make the script executable directly on Linux/MacOS:

#!/usr/bin/env -S deno run
import ...

While Windows doesn't support shebang script files, the deno install command works on Windows/Linux/MacOS to install a user-local script wrapper that works everywhere.

Not only that, you can deno install and deno run scripts from any URL!

deno run https://deno.land/x/cliffy@v0.25.7/examples/ansi/color_themes.ts

But wait, there's more!

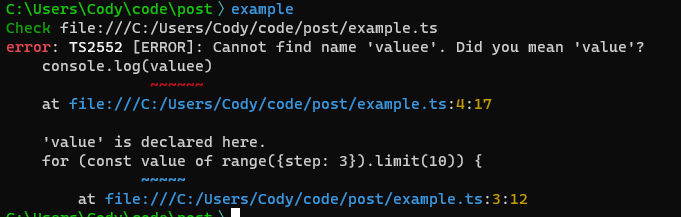

Deno makes TypeScript a first-class language instead of an add-on, as it is in Node.js, so the file you write is strongly typed right out of the box. This can help detect many sorts of errors that Bash, Python, Ruby, and other scripting languages would let slip through the cracks.

By default, Deno leaves type checking to your IDE. (I recommend the Deno plugin for VSCode.) The theory is that you've probably written your script in an IDE, so by the time you deno run it, it would be redundant to check it again. But, if you or your teammates prefer to code in plain text editors, you can get type-checking there as well by updating your shebang:

#!/usr/bin/env -S deno run --check

Now, Deno will perform a type check on a script before executing it. If the check fails, the script is never executed. This is much safer than getting halfway through a Bash or Python script and failing or running into undefined behavior because you typo'd a variable name (or, in Bash, had a syntax error).

Don't worry, the results of type checks are cached by Deno, so you will only pay the cost when the file is first run or modified.

Summary

While I have not been a fan of JavaScript in the past, Deno modernizes JavaScript/TypeScript development so much that I find myself very productive in it. It's replaced Bash and Python as my go-to scripting language. If you or your team are writing Bash scripts, I'd strongly recommend trying Deno instead!

Golang: (Still) Not a Fan

I try to be pragmatic when it comes to programming languages. I've enjoyed learning a lot of programming languages over the years, and they all have varying benefits and downsides. Like a lot of topics in Computer Science, choosing a language is all about tradeoffs.

I've seen too many instances of people blaming the programming language they're using for some problem when in fact it's just that they misunderstood the problem, or didn't have a good grasp of how the language worked. I don't want to be That Guy.

However, I still really haven't understood the hype behind Go. Despite using it a few times over the years, I do not find writing Go code pleasant. Never have I thought "Wow, this is so much nicer in Go than [other language]." If asked, I can't think of a task I'd recommend writing in Go vs. several other languages. (In fact, part of my reason for finally compiling my issues with Go into a blog post is so I'll have a convenient place to point folks in the future w/o having to rehash everything.)

Before I get into what I don't like about the language, I'll give credit where credit is due for several features that I do like in Go.

Stuff I Like

No Function Coloring

Go doesn't suffer from "Function Coloring". All Go code runs in lightweight "goroutines", which Go automatically suspends when they're waiting on I/O. For simple functions, you don't have to worry about marking them as async, or awaiting their results. You just write procedural code and get async performance "for free".

Defer

Defer is great. I love patterns and tools that let me get rid of unnecessary indentation in my code. Instead of something like:

let resource = openResource()
try {
    let resource2 = openResource2()
    try {
        // etc.
    } finally {
        resource2.close()
    }
} finally {
    resource.close()
}

You get something like:

resource := openResource()
defer resource.Close()
resource2 := openResource2()
defer resource2.Close()
// etc.

The common pattern in other languages is to provide control flow that desugars into try/finally/close, but even that simplification still results in unnecessary indentation:

try (var fr = new FileReader(path); var fr2 = new FileReader(path2)) {
    // (indented) etc.
}

I prefer flatter code, and defer is great for that.
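Languages without defer can get part of the way there with a small helper. Here's a minimal TypeScript sketch (withDefer and openResource are hypothetical names) that runs registered cleanups in last-in, first-out order, like Go, whether the body returns or throws. Newer TypeScript versions (5.2+) also have using declarations for this, but they rely on Symbol.dispose being available at runtime.

```typescript
// A Go-style `defer` for synchronous code: cleanups registered via `defer`
// run in reverse registration order when `body` returns or throws.
function withDefer<T>(body: (defer: (cleanup: () => void) => void) => T): T {
  const cleanups: Array<() => void> = [];
  try {
    return body((cleanup) => {
      cleanups.push(cleanup);
    });
  } finally {
    // Note: a cleanup that throws skips the remaining ones; a fuller
    // version would run them all and aggregate the errors.
    let cleanup: (() => void) | undefined;
    while ((cleanup = cleanups.pop()) !== undefined) {
      cleanup();
    }
  }
}

interface Resource {
  close(): void;
}

// Hypothetical resource that records open/close events in `log`.
function openResource(name: string, log: string[]): Resource {
  log.push(`open ${name}`);
  return {
    close() {
      log.push(`close ${name}`);
    },
  };
}

const log: string[] = [];
withDefer((defer) => {
  const resource = openResource("resource", log);
  defer(() => resource.close());
  const resource2 = openResource("resource2", log);
  defer(() => resource2.close());
  log.push("etc.");
});
console.log(log);
```

The body stays flat like the Go version: each resource's cleanup sits right next to where it was opened, and resource2 is closed before resource.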

Composition Over Inheritance

I've been hearing "[Prefer] composition over inheritance" since I was in university (many) years ago, but Go was the first language I learned that seemed to take it to heart. Go does not have classes, so there is no inheritance. But if you embed a struct into another struct, the Go compiler does all the work of composition for you. No need to write boilerplate delegation methods. Nice.

Story Time: Encapsulation

Now that we have the nice parts out of the way, I'll dig into the parts I have problems with. I'll start with a story about my experience with Go. Feel free to skip to the "TL;DR" section below for the summary.

Back in 2016, my team was maintaining a tool that needed to make thousands of HTTP(S) requests several times a day. The tool had been written in Python, and as the number of requests grew, it was taking longer and longer to run. A teammate decided to take a stab at rewriting it in Go to see if we could get a performance increase. His initial tests looked promising, but we quickly ran into our first issues.

- Unbounded resource use

- Unbounded parallelism

- Lots of boilerplate for managing channels

The initial implementation just queried a list of all URLs we needed to fetch, then created a goroutine for each one. Each goroutine would fetch data from the URL, then send the results to a channel to be collected and analyzed downstream. (IIRC this is a pattern lifted directly from the Tour of Go docs. Goroutines are cheap! Just make everything a goroutine! Woo!) Unfortunately, creating an unbounded number of goroutines both consumed an unbounded amount of memory and an unbounded amount of network traffic. We ended up getting less reliable results in Go due to an increase in timeouts and crashes.

Given the chance to help out with a new programming language, I joined the effort and we ended up finding that we had two bottlenecks: First, our DNS server seemed to have some maximum number of simultaneous requests it would reliably support. But also (possibly relatedly), we seemed to be overwhelming our network bandwidth/stack/quota when querying ALL THE THINGS at the same time.

I suggested we put some bounds on the parallelism. If I were working in Java, I'd reach for something like an ExecutorService, which is a very nice API for sending tasks to a thread pool, and receiving the results. We didn't find anything like that in Go. I guess the lack of generics meant that it wasn't easy for anyone to write a generic high-level library like that in Go. So instead, we wrote all the boilerplate channel management ourselves. Because we had two different worker pools to manage, we had to write it twice. And we had to use low-level synchronization tools like WaitGroups to manually manage resources.

Disillusioned by how gnarly a simple tool turned out, I did some searching to find out if Go had plans to add Generics. At the time, that was a vehement "No". Not only did the language implementors say it was unnecessary (despite having hard-coded generics-equivalents for things like append(), make(), channels, etc.), but the community seemed downright hostile to people asking about it.

At that point I'd already played with Rust enough to have a fair idea that such a thing was possible. In a weekend or two, I wrote a Rust library called Pipeliner, which handles all of the boilerplate of parallelism for you. Behind the scenes, it has a similar implementation to our Go implementation. It creates worker pools, passes data to them through channels, and collects all the results (fan-out/fan-in). Unlike the Go code, all that logic gets written and tested in a separate, generic library, leaving our tool to just contain our high-level business logic. Additionally, this was all implemented atop Rust's type-safe, null-safe, memory-safe IntoIterator interface. All of our application logic could be expressed more succinctly and safely, in roughly:

let results = load_urls()?
    .with_threads(num_dns_threads, do_dns_lookup)
    .with_threads(num_http_threads, do_http_queries);
for result in results {
    // etc.
}
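The bounded fan-out/fan-in at the heart of that story is small once it lives in a generic helper. Below is a TypeScript sketch; mapWithConcurrency is a hypothetical name, not Pipeliner's API, and it uses a fixed pool of async workers pulling from a shared index rather than OS threads. Results come back in input order.

```typescript
// Run `fn` over `items` with at most `limit` calls in flight at once.
// Results are returned in the same order as `items`. If any call rejects,
// the returned promise rejects; workers already running are not cancelled.
async function mapWithConcurrency<T, R>(
  items: readonly T[],
  limit: number,
  fn: (item: T) => Promise<R>,
): Promise<R[]> {
  if (!Number.isInteger(limit) || limit < 1) {
    throw new RangeError("limit must be a positive integer");
  }
  const results = new Array<R>(items.length);
  let nextIndex = 0;

  // Each worker repeatedly claims the next unprocessed index. JavaScript runs
  // this synchronously between awaits, so no two workers claim the same index.
  async function worker(): Promise<void> {
    while (nextIndex < items.length) {
      const i = nextIndex++;
      results[i] = await fn(items[i]);
    }
  }

  // Fan out to a fixed pool of workers, then fan in by waiting for all of them.
  const poolSize = Math.min(limit, items.length);
  await Promise.all(Array.from({ length: poolSize }, () => worker()));
  return results;
}
```

A two-stage version of the tool could call it twice, e.g. with hypothetical lookUp and query functions: first over the URLs with the DNS worker count, then over the results with the HTTP worker count. Unlike a channel-based pipeline, that finishes every DNS lookup before the first HTTP request starts.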

Go 1.18 Generics

Recently, I interviewed with a company that wrote mostly/only Go. "No problem," I thought. "I'm pragmatic. Go can't be as bad as I remember. And it's got generics now!"

To brush up on my Go, and learn its generics, I decided to port Pipeliner "back" into Go. But I didn't get far into that task before I hit a roadblock: Go generics do not allow generic parameters on methods. This means you can't write something like:

type Mapper[T any] interface {
    Map[Output any](mapFn func(T) Output) Mapper[Output]
}

Which means your library's users can't:

zs := xs.Map(to_y).Map(to_z)

This is due to a limitation in the way that interfaces are resolved in Go. It feels like a glaring hole in generics which other languages don't suffer from. "I'm pretty sure TypeScript has a better Generics implementation than this", I thought to myself. So I set off to write a TypeScript implementation to prove myself wrong. I failed.
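For comparison, here's the shape of that Mapper interface in TypeScript, where a method can declare its own type parameter. ArrayMapper is a hypothetical, eager (array-backed) implementation kept small for illustration:

```typescript
// A generic interface whose method introduces its own type parameter,
// the thing Go's generics don't allow on methods.
interface Mapper<T> {
  map<Output>(mapFn: (value: T) => Output): Mapper<Output>;
  toArray(): T[];
}

// Hypothetical array-backed implementation, checked against Mapper<T>.
class ArrayMapper<T> implements Mapper<T> {
  private readonly values: readonly T[];

  constructor(values: readonly T[]) {
    this.values = values;
  }

  map<Output>(mapFn: (value: T) => Output): Mapper<Output> {
    return new ArrayMapper(this.values.map((value) => mapFn(value)));
  }

  toArray(): T[] {
    return [...this.values];
  }
}

// Chaining works, and each step's element type is inferred:
// number -> number -> string. zs is ["#2", "#4", "#6"].
const zs = new ArrayMapper([1, 2, 3])
  .map((x) => x * 2)
  .map((y) => `#${y}`)
  .toArray();
```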

IMO, good languages give library authors tools to write nice abstractions so that other developers have easy, safe tools to use, instead of having to rewrite/reinvent boilerplate all the time.

TL;DR

- Despite the creators of Go and much of the Go community saying there was no need for Generics, they were finally added in Go 1.18.

- But the generics are still so basic that they can't support fairly standard use cases for generics. For example, in 2023, Go still doesn't have a standard Iterable/Iterator interface. (And the proposals at that link don't look promising to me.)

- Without good support for generics, it's difficult to write good higher-level libraries that can abstract complex boilerplate away from developers.

- As a result you either get poorer (unsafe, leaky) APIs, or developers just rewriting the same boilerplate code all the time (which is error-prone).

Other Dislikes

OK this post is already really long, so I'm just going to bullet-point some of my other complaints:

- Oddly low-level for a modern GC'd language. Why do I need to write foo = append(foo, bar) instead of just foo.push(bar)?

- Interfaces are magic. Does Foo implement Bar? Better check all its methods to see if they match Bar's. You can't just declare "Foo implements Bar" and have the compiler check it for you. (My favorite syntax for this is Rust's: impl Bar for Foo { … }, which explicitly groups only the methods required for implementing a particular interface, so it's clear what each method is for.)

- The automatic composition/delegation that I mentioned above is cool, but I don't think there's a way to mark an override as such so that the compiler can check it for you.

- The compiler is annoyingly opinionated. If you have an unused variable, your code just won't compile. Even if the variable is unused because you've just commented out a chunk of code to debug something. Or when you're scaffolding something and want to test your progress before it's complete. Playing dumb whack-a-mole w/ errors that don't matter while experimenting is not the best use of my time. Save it for lints.

- Go isn't null-safe. Why am I getting nil pointer dereferences at runtime? Rust, Kotlin, and TypeScript have had null safety for a while now.

- Go has been described by Rob Pike as "for people not capable of understanding a brilliant language".

- This sort of elitism rubs me the wrong way.

- I believe you can have a "brilliant language" that enables people of many different levels to be productive. In fact, having tools to make safe APIs means that people with more interest/time can write libraries in that language that save others time and duplicated effort.
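Three of the complaints above (declared interface implementation, null safety, and appending) look like this in TypeScript, one of the languages named in the list. Foo, Bar, and nameLength are placeholder names:

```typescript
interface Bar {
  describe(): string;
}

// `implements Bar` asks the compiler to verify that Foo has every member of
// Bar; deleting or misspelling describe() below becomes a compile-time error.
// (TypeScript interfaces are still structural, so the clause is a checked
// declaration of intent rather than a requirement for using Foo as a Bar.)
class Foo implements Bar {
  describe(): string {
    return "I am a Foo";
  }
}

// With "strict" (strictNullChecks) enabled, `name` must be narrowed before
// use: writing `return name.length;` first would be rejected at compile time.
function nameLength(name: string | null): number {
  if (name === null) {
    return 0;
  }
  return name.length;
}

// Growing an array is a method call; there is no reassignment step.
const items: string[] = [];
items.push("bar");
```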

Deno Embedder

I've really been enjoying writing code in Deno. It does a great job of removing barriers to just writing some code. You can open up a text file, import some dependencies from URLs, and deno run it to get stuff done really quickly.

One nice thing is that Deno will walk all the transitive dependencies of any of your code, download them, and cache them. So even if your single file actually stands on the shoulders of giant( dependency tree)s, you still get to just treat it as a single script file that you want to run.

You can deno run foo.ts or deno install https://url.to/foo.ts and everything is pretty painless. My favorite is that you can even deno compile foo.ts to bundle up all of those transitive dependencies into a self-contained executable for folks who don't have/want Deno.

Well... almost.

This doesn't work if you're writing something that needs access to static data files, though. The problem is that Deno's cache resolution mechanism only works for code files (.ts, .js, .tsx, .jsx and more recently, .json). So if you want to include an index.html or style.css or image.jpg, you're stuck with either reading it from disk or fetching it from the network.

If you read from disk, deno run <remoteUrl> doesn't work, and if you fetch from the network, your application can't work in disconnected environments. (Not to mention the overhead of constantly re-fetching network resources every time your application needs them.)

In FeoBlog, I've been using the rust-embed crate, which works well. I was a bit surprised that I didn't find anything that was quite as easy to use in Deno. So I wrote it myself!

Deno Embedder follows a pattern I first saw in Fresh: You run a development server that automatically (re)generates code for you during development. Once you're finished changing things, you commit both your changes AND the generated code, and deploy that.

In Fresh's case, the generated code is (I think?) just the fresh.gen.ts file which contains metadata about all of the web site's routes, and their corresponding .tsx files.

Deno Embedder instead will create a directory of .ts files containing base64-encoded, (possibly) compressed copies of files from some other source directory. These .ts files are perfectly cacheable by Deno, so they automatically get picked up by deno run, deno install, deno compile, etc.
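The core idea can be sketched in a few lines of TypeScript: take a file's bytes, base64-encode them, and emit the source of a .ts module that contains them, so Deno treats the data like any other code dependency. This is a hypothetical minimal version, not Deno Embedder's actual generated format; it skips compression and the development server:

```typescript
// Encode bytes as base64 with the web-standard btoa(), which expects a
// "binary string" where each character code is one byte (0-255).
function bytesToBase64(bytes: Uint8Array): string {
  let binary = "";
  for (let i = 0; i < bytes.length; i++) {
    binary += String.fromCharCode(bytes[i]);
  }
  return btoa(binary);
}

// Inverse of bytesToBase64, for the code that consumes the generated module.
function base64ToBytes(base64: string): Uint8Array {
  const binary = atob(base64);
  const bytes = new Uint8Array(binary.length);
  for (let i = 0; i < binary.length; i++) {
    bytes[i] = binary.charCodeAt(i);
  }
  return bytes;
}

// Hypothetical generator: the source text of a .ts module embedding one file.
function toEmbeddedModule(bytes: Uint8Array, contentType: string): string {
  return [
    "// Generated file: do not edit by hand.",
    `export const contentType = ${JSON.stringify(contentType)};`,
    `export const base64 = ${JSON.stringify(bytesToBase64(bytes))};`,
    "",
  ].join("\n");
}
```

A build step could write toEmbeddedModule(await Deno.readFile("index.html"), "text/html") to a file such as embed/index.html.ts (a hypothetical path); the app would then import { base64 } from that module and call base64ToBytes(base64) when serving it.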

I'm enjoying using it for another personal project I'm working on. I really like the model of creating a single executable that contains all of its dependencies, and this makes it a lot easier. Let me know if you end up using it!

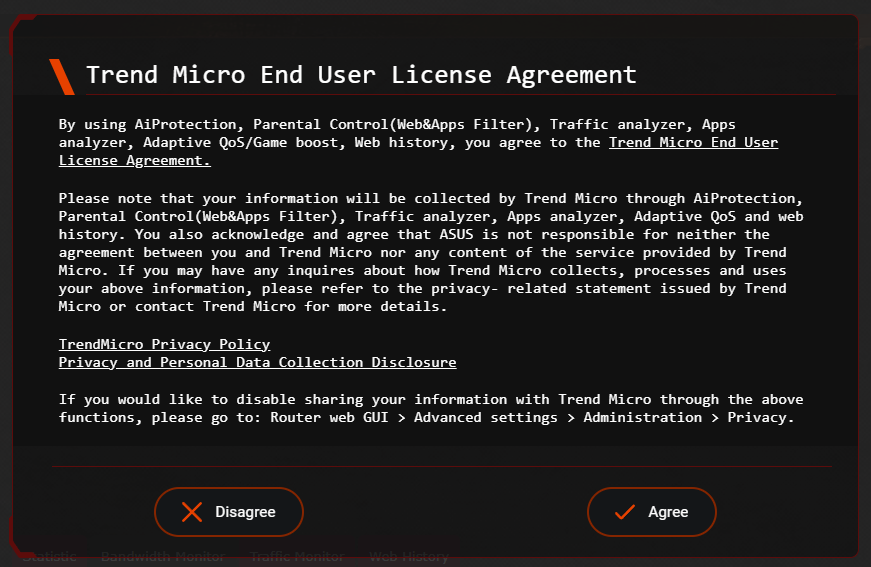

My New ASUS Router Wants To Spy On Me

After a recommendation from coworkers, and reading/watching some reviews online, I decided to get a new router. I purchased the "ASUS Rapture GT-AXE11000 WiFi6E" router in particular for its nice network analytics and QoS features.

On unpacking and setting up said router, I'm disappointed to find that the features I purchased the router for require that I give network analytics data over to a third-party.

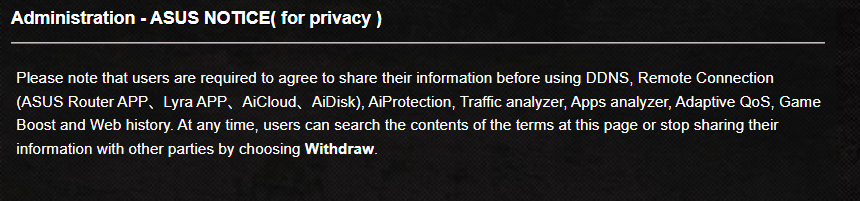

The last line of that popup says:

If you would like to disable sharing your information with Trend Micro through the above functions, please go to: Router web GUI > Advanced settings > Administration > Privacy

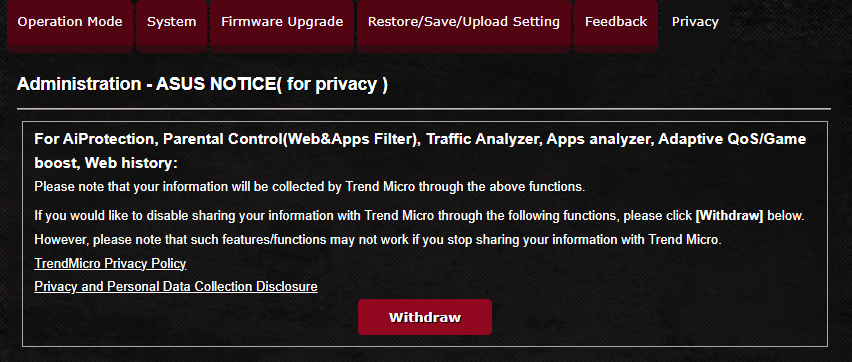

For a brief few seconds I was naive enough to think that the issue was just that this behavior was opt-out instead of opt-in. So I headed over to the Privacy settings to opt out.

However, please note that such features/functions may not work if you stop sharing your information with Trend Micro.

"May not work" my ass! If you withdraw consent it just disables the features entirely, and then tells you:

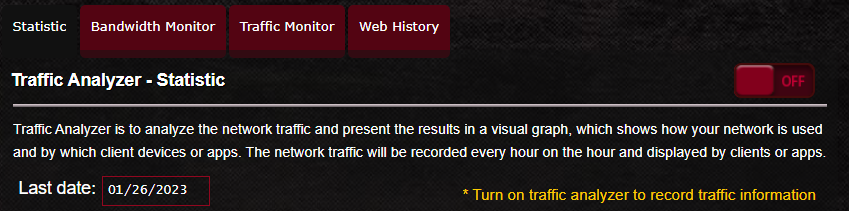

Please note that users are required to agree to share their information before using [the features that I bought this router for].

At least now (after a couple router restarts to apply settings) they're telling the truth. This is not an "option", it's a requirement.

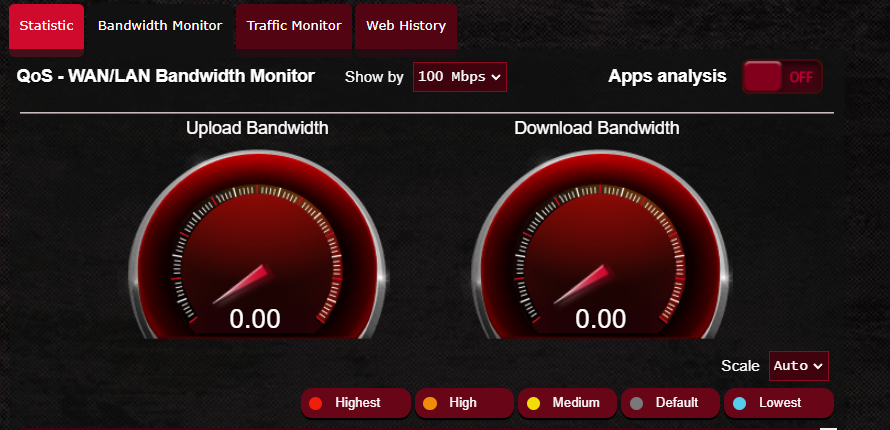

If I go back to the "Statistic" or "Bandwidth Monitor" tabs, they're now disabled:

I'm considering returning this router for one that won't try to spy on me. There is NO reason for this kind of thing in my home router, a device which should be prioritizing my own security and privacy. And certainly not for features like QoS or bandwidth usage monitoring.

Does anyone have recommendations? I want something that:

- Has good network analytics so that when network issues occur, I can determine if it's due to one of my devices, or my ISP.

- Has good QoS (preferably one that can adjust to varying bandwidth availability throughout the day without me having to constantly toggle bandwidth caps)

- Doesn't require consenting to third-party data collection.

I do not trust myself to write software without some form of type checking. And I prefer more typing (ex: nullability, generics) when it is available.

This comes from a long history of realizing just how many errors end up coming down to type errors -- some piece of data was in a state I didn't account for or expect, because no type system was present to document and enforce its proper states.

Relatedly, I trust other programmers less when they say they do not need these tools to write good code. My immediate impression when someone says this is that they have more ego than self-awareness. In my opinion, it's obvious which of those makes for a better coworker, teammate, or co-contributor.

Fixing your code before the weekend is like cleaning your house before you go on vacation. So much nicer to come back to. 😊

Me: I dislike that the usual software engineer career path is to move into management. I just want to write cooode!

Also me: (leading standup today, being taskmaster, making sure we capture details into tickets, unblock people, shuffle priorities from Product Mgmt, volunteering to help other devs w/ something they're stuck on) I am actually quite good at this.

😑

YAGNI

YAGNI. AIYAGNI, YWKWYNUYNI.

Not (Yet) Banned: FeoBlog

So Twitter came out with a great new feature today: You're not allowed to link to other social media web sites.

What is a violation of this policy?

At both the Tweet level and the account level, we will remove any free promotion of prohibited 3rd-party social media platforms, such as linking out (i.e. using URLs) to any of the below platforms on Twitter, or providing your handle without a URL:

- Prohibited platforms:

- Facebook, Instagram, Mastodon, Truth Social, Tribel, Post and Nostr

- 3rd-party social media link aggregators such as linktr.ee, lnk.bio

It's a laughable attempt to stop the bleeding of people fleeing to other social networks, and it's going to Streisand Effect itself into the (figurative) Internet Hall of Fame. Most of the point of Twitter for many is finding and posting links to interesting stuff online.

What's next, a ban on "free promotion of prohibited 3rd-party news sources" that point out what a ridiculous policy this is? (Though, I suppose that's not far from what they're already doing -- banning reporters who unfavorably cover Musk.)

FeoBlog is not yet banned, of course, because it's not on anyone's radar. What can I do to get some more users and get it noticed?

If you want to give it a try, it's open source software, so you can download it and run your own server. Or, if you don't want to bother with all that, ping me and I'll get you set up with a free "account" on my server. :)